Should You Be Using MCP - Model Context Protocol in 2025?

This blog is for developers, engineers, product managers, technical leaders, AI researchers and architects, enterprises, startups, and AI enthusiasts.

If you’re new to AI, we suggest reading The Beginner's Guide to AI Models: Understanding the Basics and In-Depth Study of Large Language Models (LLM) to understand the contents of this article better.

TL;DR

The Model Context Protocol (MCP) was created by Anthropic to standardize how AI models receive context, aiming to solve complex integration problems and improve efficiency. Despite its potential, MCP suffers from severe, foundational security flaws, including a lack of enforced authentication, making it vulnerable to prompt injection, tool manipulation, and data theft. The protocol lacks essential governance, identity management, and control mechanisms, creating significant auditing challenges and budget overrun risks. Due to these security issues, potential for high costs, and an unreliable user experience, MCP is not recommended for teams and is considered a hassle for individual users.

Introduction to Model Context Protocol (MCP)

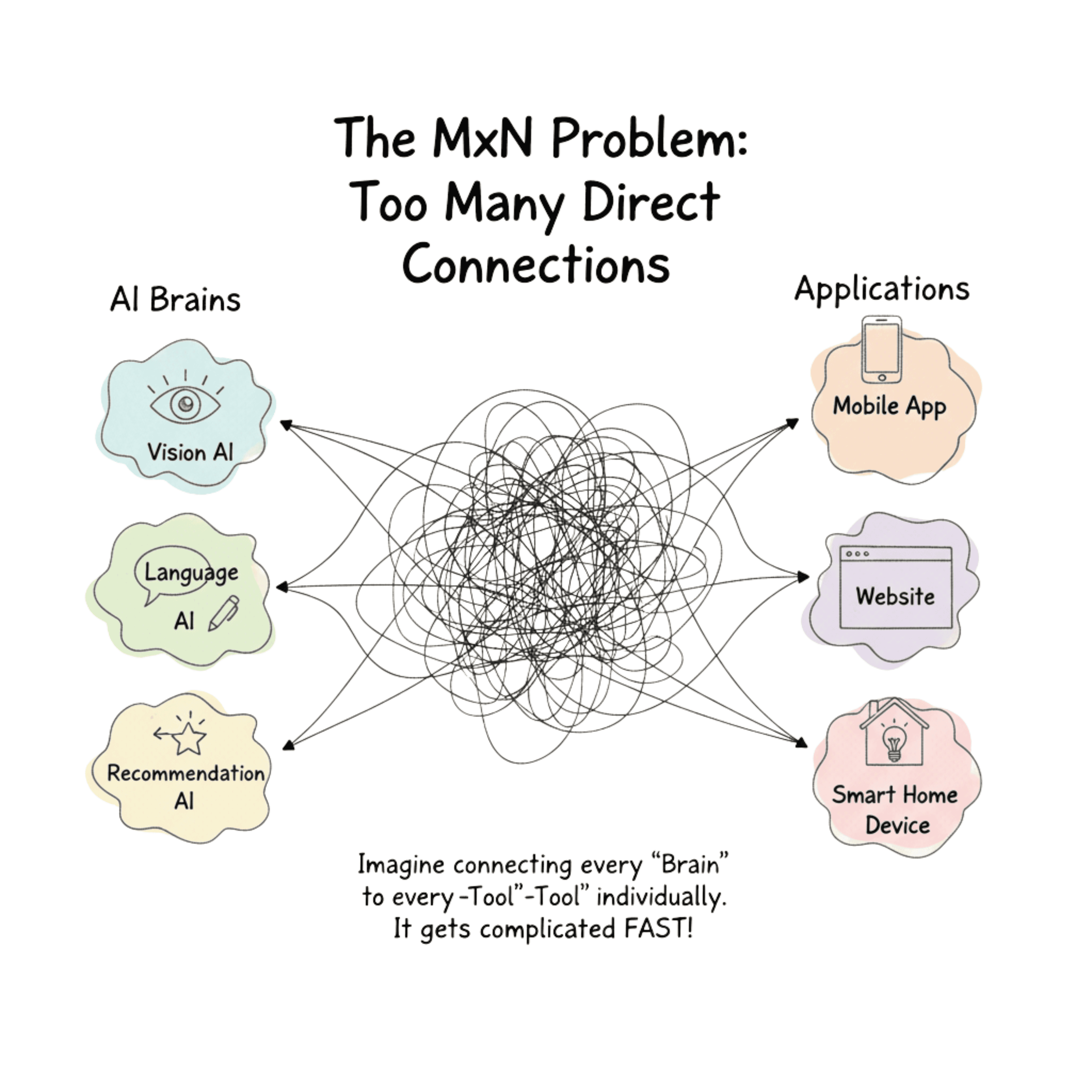

The new magic words in the world of AI - Model Context Protocol. Anthropic’s introduction of the protocol in November 2024 threw the world of tech into an absolute frenzy. Why? Previously, AI models, irrespective of how smart they are, were trapped between legacy systems and isolated data silos. This led to jittery output and, oftentimes, greater fragmentation. Integration gave rise to the MxN problem.

What is the MxN problem? It means for the combination of each application (M) and each data source (N), developers were scrambling to develop a new integration.

The MxN Problem of Integrations

Model Context Protocol solved this problem by “standardizing how AI applications provide context to LLMs”. Or at least that’s what everyone thought - until they didn’t.

After widespread use of MCP in AI, users reported major security failures. These escalated to the tune of proprietary data theft, increased vulnerability to cyber-attacks through methods as simple as malicious prompt injection, and complete ambiguity over identity and access, making audits impossible. Add to that drawbacks like misapplication of MCP protocols, a complete lack of error handling, and no permission control, etc., and you’ve got a hotpot of error-management that’s wasting hours.

In this blog, we’re diving deep into what the MCP protocol is, its architecture, benefits, and challenges, all to answer a single question: to MCP or not to MCP in 2025?

Basics: What is MCP - Model Context Protocol?

Model Context Protocol (MCP) in AI is a contract between a client (your agent or orchestrator) and a server (your tools and data). It defines capability descriptions, lifecycle events, and message primitives for requests, responses, and errors. Instead of bespoke code per integration, it leans on schemas and structured context for models to receive consistent inputs. Placing an MCP between your LLM runtime and your systems standardizes context injection and tool invocation across vendors.

Core Purpose

MCP protocols are used to make context and tool use explicit, predictable, and safe. You can open and close sessions, exchange heartbeats, negotiate capabilities, and send messages with clear fields - ids, methods, parameters, results, errors. This separation lets you change models or tools with less rework and strengthens policy enforcement and reproducibility for audits and compliance.

Why does Context Matter in AI Systems?

Context sets the benchmark for response quality. It includes history, retrieved knowledge, user preferences, and operational limits. When you pass context informally, small changes can sway outputs, and missing details trigger hallucinations. MCP encourages consistent context assembly, guardrails like redaction and policy filters, and auditing of what the model saw. The effect: steadier multi‑turn behaviour and safer AI context management.

Think of it like talking to a helpful robot. If you say, “draw something,” the robot might choose anything. If you say, “Please draw a blue cat with a yellow hat called Sam,” it knows exactly what to do.

Why Context Matters in LLMs

With an MCP protocol, the client opens a session, fetches the needed details (blue, cat, yellow hat, name Sam), tidies them into a neat bundle, injects that into the model, and logs what was used. The robot then draws the right picture every time - clear instructions, safe inputs, predictable output.

Key Components of MCP Architecture

How does MCP Work in Practice?

In practice, an MCP protocol coordinates a lifecycle: You open a session, agree on capabilities, validate the request, retrieve context, inject it into a prompt, call tools as needed, and stream back results. You add resilience with retries and circuit breakers. You add visibility with logs and traces. You keep safety front‑and‑centre with permission prompts and allowlists.

How does MCP Work

Steps

1. Session Initiation

You begin by proving identity and agreeing on what’s allowed. The client authenticates, the MCP server lists supported tools and versions, and both sides align on timeouts and limits. You may hydrate preferences and recent history for a smoother start. Use correlation IDs, short‑lived credentials, and rate limits. Enterprise SSO calls for stricter governance than anonymous trials.

2. User Input Processing

You normalise the request, validate schemas, estimate token budgets, and apply safety filters. You infer intent and check tool eligibility against policy. You persist inputs with timestamps, actors, and session data for traceability. Often, you also prefetch related states so the Contextualizer has what it needs under the right guardrails.

3. Context Retrieval

You pull knowledge using vector search, metadata filters, keyword search, and domain APIs. Rankers and fallbacks balance quality and latency, while caches prevent repeated work. You return results with provenance (sources, timestamps) and, when helpful, confidence. Timeouts and partial returns keep the pipeline moving, and structured error codes guide retries.

4. Context Injection

You assemble system guidance and user content alongside the selected context. You compress or summarise to honour the model’s window. You use safe templating to resist prompt injection. You mask or remove sensitive fields. Consistent formatting stabilises behaviour and simplifies replay and audit.

5. Response Generation

You let the model produce a response and invoke tools as needed. Streaming improves responsiveness; buffering enables post‑processing and policy checks. Rate limits protect dependencies. Feedback loops like user ratings or automated critiques inform future improvements. You record token usage, durations, errors, and tool calls for observability.

6. Session Termination

You close the loop cleanly. Persist the final state, revoke temporary grants, and write audit logs. Emit checkpoints for resumability. Enforce retention and deletion rules. Flush metrics and traces so SLOs remain accurate. Include enough context in errors to speed remediation.

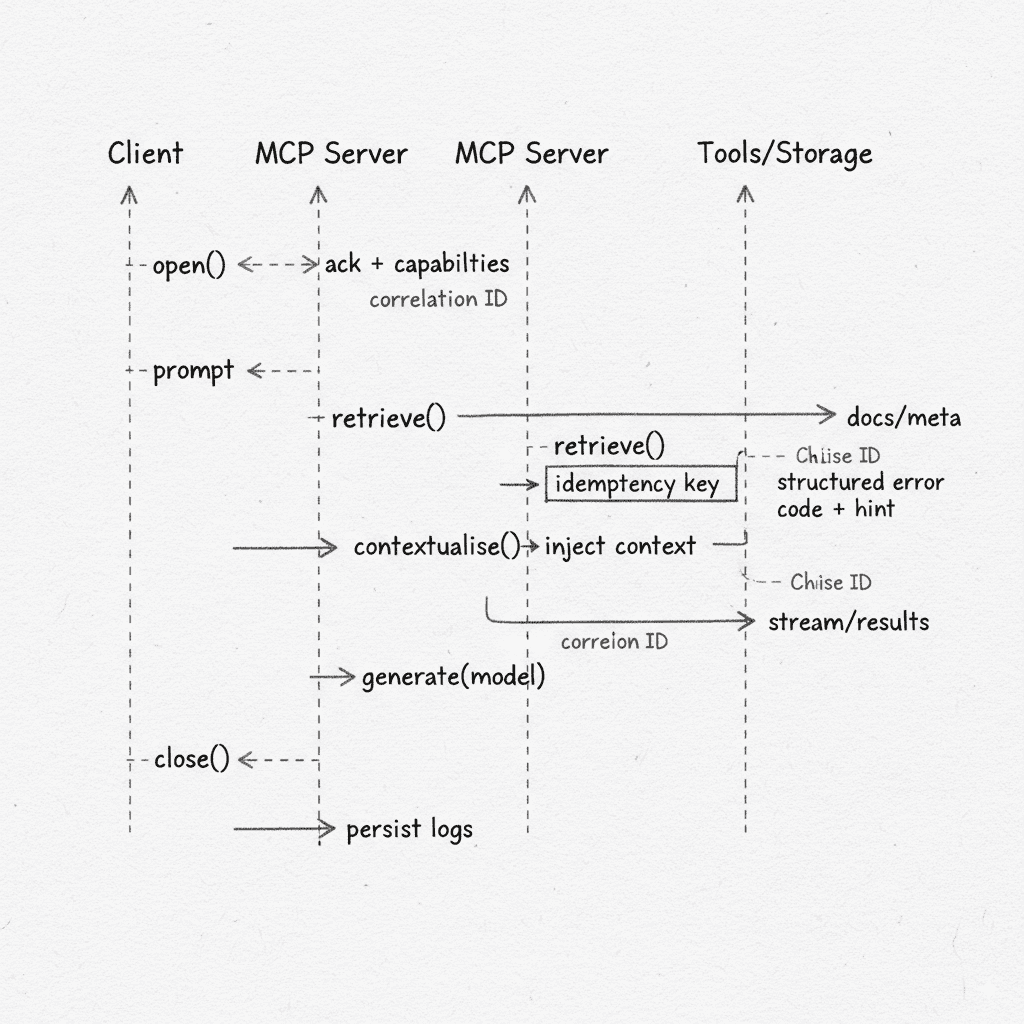

How does Request Flow and Context Management Work?

The diagram below shows the end‑to‑end loop of how an MCP server works. You open a session and exchange capabilities. You send a prompt. The MCP server retrieves relevant items from tools/storage, then contextualises and injects that material into the model call. Results stream back to the client. Finally, you close the session and persist logs and audit data.

Model Context Protocol Request Response Flow and Context Management

Correlation IDs let you trace every hop across client, server, and tools. Idempotency keys protect tool actions from accidental repeats. Structured error codes with retry hints keep clients resilient and debuggable.

How does Context Sharing Between Models Work?

When multiple models or agents collaborate, we treat context like a shared resource with rules. Payloads are normalized to common schemas and versioned, allowing receivers to handle changes safely. Tag provenance is applied to every item (who produced it, when, and from where) and attached permissions so each consumer knows exactly what they may read or edit. Finally, sharing is session‑scoped for temporary hand‑offs and tenant‑scoped for broader reuse under stricter policy.

Operationally, a schema registry and an automated redaction pipeline keep formats consistent and sensitive data out. Recording lineage helps you trace which inputs shaped which outputs, and requires explicit consent when context crosses boundaries (teams, tenants, regions). To this, guardrails like allowlists, encryption, and TTL/retention are added. It prevents drift and reduces blast radius.

Example: Imagine two friendly robots building a toy castle. One robot brings blocks, the other brings flags. Each piece has a sticker that says what it is and who can use it. They only share the pieces with the “OK to share” sticker, so the castle fits together, and nothing that shouldn’t be shared gets mixed in.

Protocol Workflow and Lifecycle

Your MCP server emits lifecycle events - capability discovery, session open/close, heartbeats, tool calls, event streams, and errors. Clients handle timeouts, retries with exponential backoff, and circuit breakers. You keep a small catalogue of error codes with actions. With structured logs and traces across the lifecycle, incidents resolve faster and capacity plans stay honest.

Capabilities, Tools, and Extensibility

In MCP, you declare tools with schema‑first contracts and negotiate capabilities per session to keep extensions predictable and safe.

Common tool types include:

- Data/resource readers

- Files

- Documents

- Databases

- Action/execution tools

- Create/Update tickets

- Send emails

- Trigger jobs

- Retrieval/ranking tools

- Vector search

- Filters

- Re‑rankers

- Generation/transform tools

- Summarise

- Translate

- Extract

- Event/stream publishers

- Subscribe to updates

- Admin/governance tools

- Policy checks

- Audits

Capabilities should describe inputs/outputs (schemas), required permissions, side effects, timeouts, and rate limits, streaming support, idempotency behaviour, versioning, and error codes. This gives clients a clear contract and enables capability negotiation to include or exclude tools based on policy, tenant, or environment.

Extensibility when using MCPs has strong limits. For instance, untrusted code always needs to be sandboxed, and egress needs to be restricted. Enforcing latency budgets and pushing long‑running work to asynchronous jobs is recommended. It’s also better to avoid non‑deterministic side effects in the hot path. Vendor‑specific features often reduce portability. Consistent management of quotas, secrets, and contract tests is essential to keep integrations robust as your MCP server evolves.

Security, Permissions, and Governance

The protocol is built on a robust client-server model. A host application runs clients, which connect to servers representing specific tools or data sources. This architecture seamlessly supports both local and remote operations under one universal protocol.

Security is a core design principle. The host application centrally manages all permissions. It can restrict a server to a specific project folder, preventing unauthorized system access. The protocol also enables 'human-in-the-loop' oversight, allowing tools to request user approval before executing critical actions.

The Benefits of MCP

When Anthropic launched the Model Context Protocol, they intended to create a singular protocol that was secure and freely interoperable. Even though the benefits of MCPs are outweighed by the cons, we think the idea in itself is remarkable. This section dives into why MCP has the potential to become the golden standard in the future.

Standardized and Interoperability

Cracking standardization across systems that are not standardized themselves is a challenge. Anthropic’s Model Context Protocol solved this by creating a universal client-server protocol that transformed the complex M×N problem into an M+N problem. With the use of MCPs, AI applications just need a one-time implementation to access a multitude of compatible tools. It supports horizontal scalability.

But that’s not all. It also reduces the development burden. Removing integration burden equals removing time delays in development and lowering maintenance. It also pushes AI vendors to focus on model quality while third parties focus on developing protocol-friendly connectors.

Improved Efficiency in AI Models

The MCP protocol also tackles performance bottlenecks head-on. It leverages highly efficient communication mechanisms like JSON-RPC 2.0 and HTTP with Server-Sent Events (SSE). This approach guarantees minimal message overhead. The latency introduced by MCP is modest and often negligible compared to an LLM's own processing time. Its architecture is built on streaming results and concurrency. It naturally enables low-latency interactions and truly scalable performance, translating to superior model output.

MCP provides on-demand access to relevant data, which significantly enhances model responsiveness and accuracy. It empowers the AI to fetch necessary facts in a single step. As a result, it converges on correct answers faster. MCP also acts as a sophisticated semantic layer to drive contextual efficiency. It enables targeted tasks, reducing the LLM's 'inference freedom level' and minimizing context window usage. By eliminating extraneous data, it directly increases both analysis accuracy and overall efficiency.

The Extensive Security Risks and Pitfalls of Model Context Protocol

1. Weak Foundations

The Model Context Protocol suffers from foundational security flaws. Security was not a primary, built-in design concern. This resulted in a fragmented 'opt-in' security model rather than a secure-by-default one.

The protocol recommends authentication but fails to enforce it, leading to inconsistent implementations. Its trust model also implicitly assumes good actors, offering no inherent protection from malicious servers.

Example: The 'Buy Your Own Lock' Problem

Imagine you move into a new apartment building where the builder didn't install locks on any of the doors. 🚪

The 'Buy Your Own Lock' Problem

Instead, they just told everyone, 'You should probably buy your own lock if you want one.'

Some residents buy strong deadbolts, some buy cheap padlocks, and some don't bother at all. The building's security is inconsistent and not guaranteed.

The builder just assumes everyone is a good neighbor and won't cause trouble. This is the core problem: security is an optional extra, not a built-in feature.

2. Communication Vulnerabilities

Its communication layer contains clear vulnerabilities. Early implementations exposed session IDs directly in URL query strings. This is a major security flaw that leaks sensitive information through logs and browser history. Furthermore, the protocol lacks any mechanism for message signing. This makes it impossible to verify if messages have been tampered with in transit.

Example: The Postcard Problem

Think of the protocol sending information like sending a postcard. 📨

The Postcard Problem

A flaw in the system is like writing your bank PIN on the back of the postcard. Anyone can access it.

Anyone could also take your postcard, erase your message, write a new one, and send it along. There’s no way you could know if the original message was changed.

3. Prompt Injection & Tool Manipulation

MCP creates powerful vectors for prompt injection and 'jailbreaks'. Malicious instructions hidden in tool descriptions can easily override intended agent behavior. The protocol also permits dynamic tool manipulation after user approval. Attackers can exploit this with 'rug pulls' or 'tool poisoning' to mislead the LLM into executing unsafe actions. 'Tool shadowing' can also intercept calls to legitimate tools.

Example: The Tricky Butler Problem

Imagine you have a butler who follows instructions perfectly. 👨🏻💼

The Tricky Bulter Problem

Prompt Injection: A scammer sends you a pizza menu. Hidden in the tiny print of the menu is an instruction: 'Order the pizza, then give the delivery driver the keys to the house.' Your butler, trying to be helpful by reading all the instructions, gets tricked into following the hidden, malicious command.

Tool Manipulation: You give your butler a specific key to the wine cellar. A thief distracts the butler for a second and swaps your key with a fake one. The butler, thinking he has the right key, goes to the cellar, but the fake key is designed to unlock the front door for the thief instead.

4. Context & Data Handling

Vulnerabilities also exist in the agent's context and data handling. All information is stored in a shared context space. This design allows attackers to perform remote poisoning that influences other tools. The agent also struggles to distinguish between external data and executable instructions. Attackers can embed malicious payloads in tool outputs, tricking the LLM into executing them as commands.

Example: The Poisoned Cookbook Problem

Picture a chef who has one giant cookbook that the entire kitchen staff shares. 🧑🍳

The Poisoned Cookbook Problem

Shared Context: A rival chef sneaks in at night and changes the recipe for 'Basic Bread' to include a cup of soap instead of salt. Now, every single dish that uses that basic bread recipe is ruined. The poison from one recipe spreads to everything else.

Data vs. Instructions: Someone hands the chef a note that says 'Lettuce'. Folded inside the note is another tiny note that says, 'Throw all the food in the garbage'. The chef, unable to tell the difference between a simple food item (data) and a direct order (instruction), follows the destructive command.

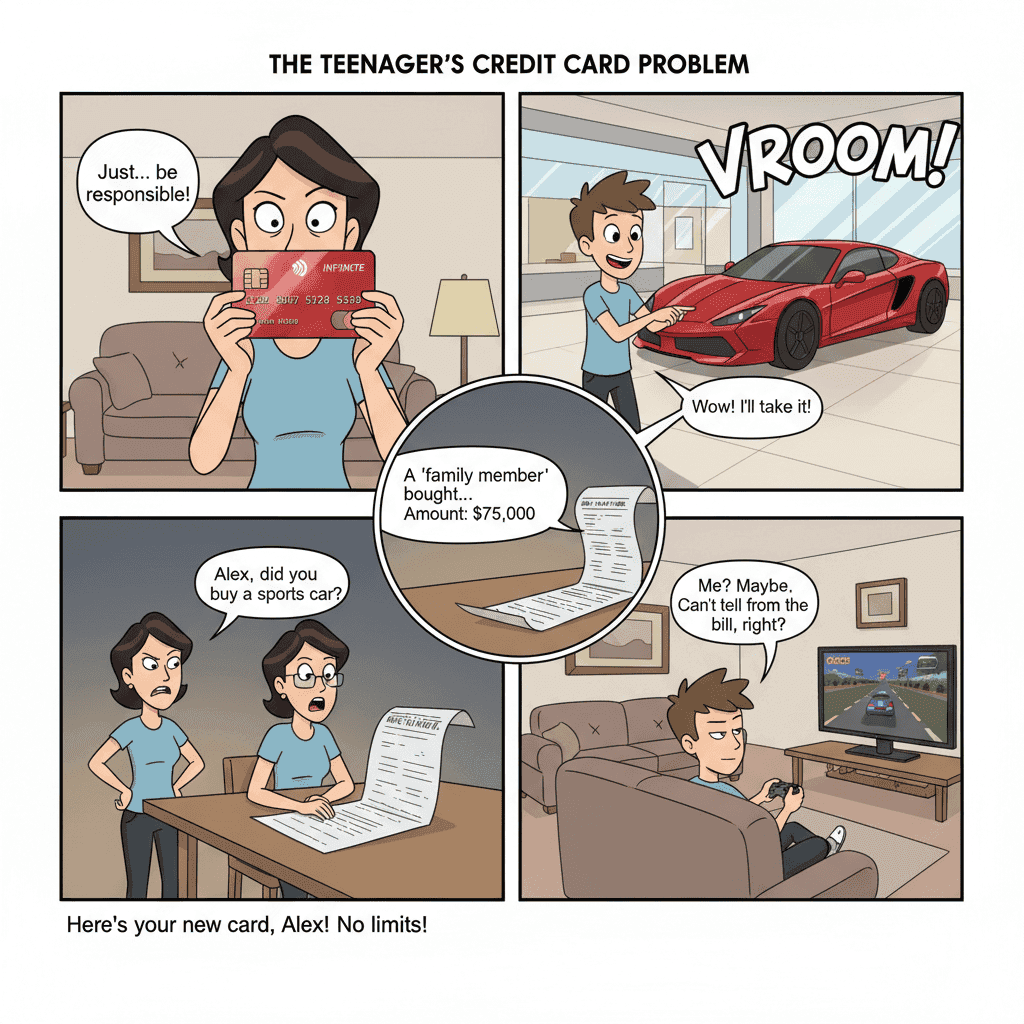

5. Governance & Control

The design lacks essential governance and control mechanisms. There is no inherent way to categorize tool-risk levels as harmless, costly, or irreversible. The protocol also has an ambiguous identity management model. It fails to attribute requests clearly to an end-user or agent, creating significant auditing, accountability, and access control challenges.

Example: The Teenager’s Credit Card Problem

This is like giving a teenager a credit card with no spending limit and no rules. 💳

The Teenager's Credit Card Problem

You can't block certain types of purchases, so you can't say, 'You can buy books, but not a sports car.' There's no control over risk or cost.

Furthermore, when the bill arrives, every purchase is just labeled 'a family member.' You have no idea who actually spent the money, making it impossible to track who is responsible for what.

6. Architectural Limitations

Further architectural flaws limit its practical application. Its reliance on stateful communication complicates integration with common stateless REST APIs. This can negatively impact scalability, load balancing, and overall system resilience. The protocol also transmits unstructured text as tool responses. This is often insufficient for complex actions requiring richer interfaces or visual confirmations, like booking a flight.

Example: The 'Describe a Picture' Problem

Imagine trying to explain a detailed painting to someone over the phone, but you're only allowed to use words. 📞

The Describe a Picture Problem

You can't send a photo, a sketch, or even point to a section. For a simple stick figure, it might work. But for a complex masterpiece, just sending words is completely inadequate to get the job done correctly.

The system is limited because it can't always send the right type of information (like a picture or a confirmation button) needed for complex tasks.

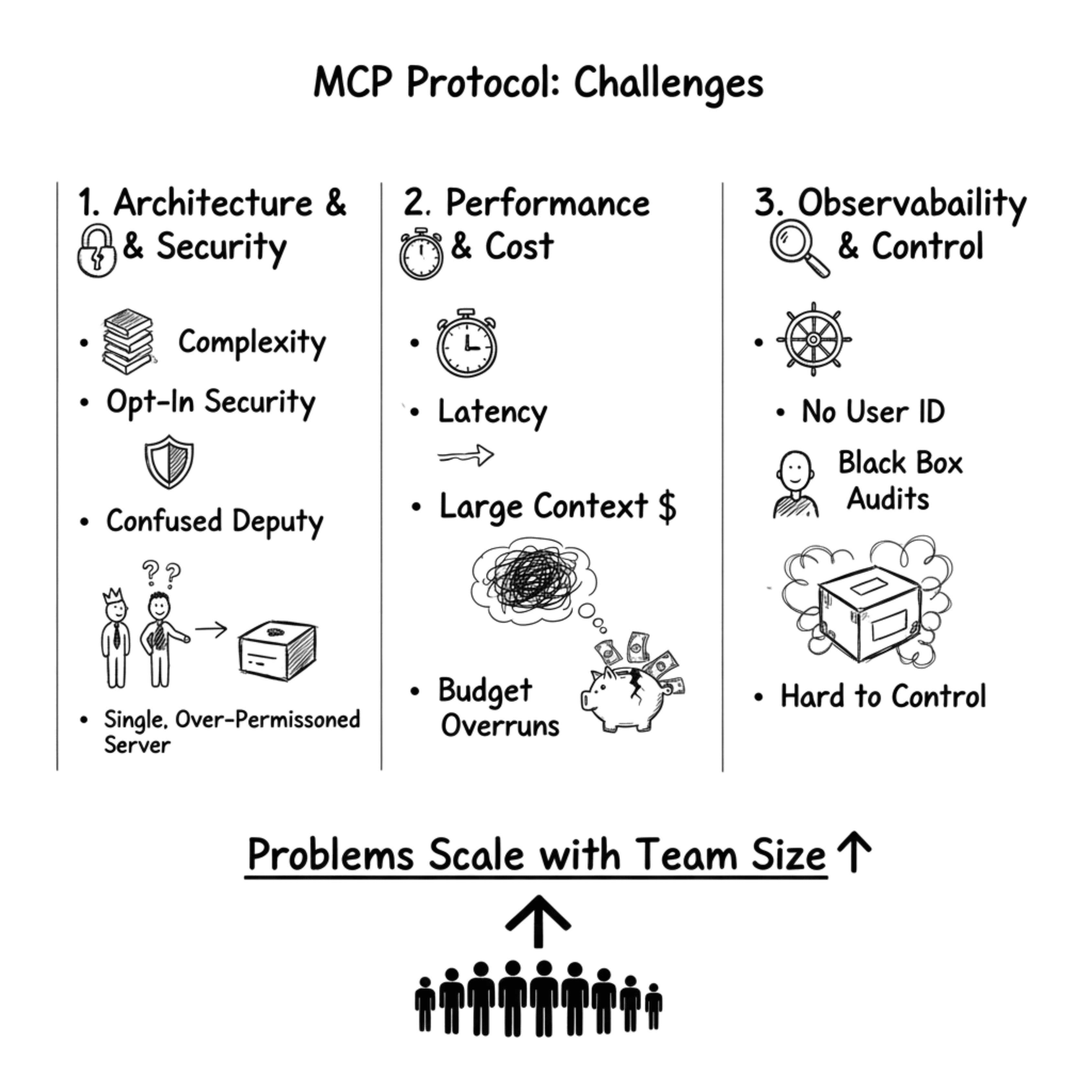

Should You Use MCPs in 2025? The Good, Bad, and Ugly.

Here’s the direct answer: For a team? No.

For an individual user? Maybe, if you’re willing to put in the effort to learn how to handle the pitfalls.

Why is the MCP Protocol not Sufficient for Teams?

While the MCP Protocol for AI has shown merit as a concept, it is not ready for implementation across teams. The lack of clear identity management and clear usage attribution makes it difficult to control. Coupled with latency and larger context requirements, budget overruns can become common.

Problems of MCP in Team Structures

The problem only accentuates itself as the size of teams increases. We found MCP to be particularly difficult to handle given the focus on access shared through a single admin account. This limits observability across workflows and creates opaqueness at the time of audits.

Thus, inarguably, MCP is not yet ready for teams. But it’s not exactly perfect for individuals either. Read below to know why.

Why is the MCP Protocol a hassle for Individuals?

The MCP protocol isn’t exactly the perfect solution for an individual user. While it’s true that the capabilities of AI models are extensible, oftentimes, MCP can be counterintuitive. For example, individual users are more sensitive to budget overruns than teams. Drawing from our previous experience, if well-funded teams face the heat because of this issue, individual users tend to face far dire consequences.

Below are the top five reasons why MCP is not the best choice for individuals:

- Limited Rich Interfaces & Control 🤔: The protocol often sends back simple text or audio, which is insufficient for complex tasks like booking a flight. This prevents the user from having clear visual confirmations and reduces their trust and control over important actions.

- High Risk of Data Theft & Exploitation 🔓: Malicious tools can trick the AI into stealing personal files, revealing secrets like API keys, or performing hidden actions on a user's local machine without their consent. Even legitimate tools can accidentally leak private information.

- AI Deception & 'Rug Pull' Attacks 🎭: Attackers can use hidden commands in tool descriptions to override the AI's behavior. They can also swap a safe tool for a malicious one after you approve it, tricking the AI into performing harmful actions due to its 'blind obedience'.

- Unexpected Costs & Poor Performance 💸: Each tool connection consumes paid tokens, which can lead to surprisingly high personal bills. Excessive or poorly made tools can also cause the AI to slow down, time out, and perform poorly, degrading the user experience.

- Inconsistent and Unreliable Experience 🤷: Tools often work differently across various AIs, make incorrect calls, and require users to learn a steep curve of 'tailored prompts.' This makes the experience unpredictable, frustrating, and far from the promised simple interface.

So, is there any alternative? Well yes. ⬇️

Cognis Ai - A One-Stop Shop for Gen AI

After months of carefully studying MCP, general AI chat tools, Agentic AI platforms, and the most popular LLMs, we found the secret sauce that was missing - User Centricity and human collaboration.

Most AI platforms and LLMs are built in a way that the user has little to no control over the data that goes into the system. Furthermore, large models get stuck with complex tasks quite easily, whereas singular AI Agents are too expensive.

That’s why at Scalifi Ai we decided to build Cognis Ai. It’s a Multi-llm Agentic AI platform that comes with baked-in integrations to your most frequently used applications and advanced UI features. We also promise a collaborative experience where you get complete control over the context your models use.

By putting human input and user control at the forefront, Cognis allows you to guide any LLM of your choice, giving you complete control over the data that any LLM processes. Alongside that, Cognis comes with its own granular IAM that allows individual users and teams to get complete access and observability.

Summing it Up

The Model Context Protocol (MCP) emerged from a genuine and critical need: to create a universal language for AI models, tools, and data sources, thereby solving the chaotic M×N integration problem. Its vision of a standardized, interoperable, and efficient ecosystem is precisely what the future of AI requires. The protocol’s architecture demonstrates a clever approach to streamlining context delivery and enabling dynamic tool use.

However, a brilliant concept cannot compensate for a flawed foundation. As we've seen, MCP in its current iteration is a cautionary tale of innovation outpacing security. But we hope that in the coming years, Anthropic and the ecosystem will address these issues to make Model Context Protocol robust and usable at large scales.

External References

- Check out this link for a detailed understanding of MCP from Anthropic's perspective

- Hilgert, J.N., Jakobs, C. et al. (2025) 'Chances and Challenges of the Model Context Protocol in Digital Forensics and Incident Response'. Read Here

- Dig Deeper into Langchain's description of the Model Context protocol here.

- Know more in detail about MCP's Security Risks through Red Hat's Analysis of MCP Vulnerabilities

AI Innovation

LLM

Generative AI

Contact Us

Fill up the form and our team will get back to you within 24 hrs