Understanding Natural Language Processing (NLP) Essentials

Introduction to Natural Language Processing

This article explains what natural language processing is in layman's terms, with visual and code examples. Unlike many other introductions to NLP basics, it takes a different approach to understanding NLP.

Rather than jumping straight into terminology or the use cases of NLP in machine learning and statistical computing, we will start with how humans understand things and then, over a series of articles, gradually work our way into the mathematical concepts in depth.

What is Natural Language Processing (NLP)?

A few definitions of NLP:

Natural language processing (NLP) refers to the branch of computer science — and more specifically, the branch of artificial intelligence or AI — concerned with giving computers the ability to understand text and spoken words in much the same way human beings can.

Natural language processing (NLP) is a machine learning technology that gives computers the ability to interpret, manipulate, and comprehend human language.

(Source: https://aws.amazon.com/what-is/nlp/)

In the simplest terms, humans use many languages to communicate through text and speech. However, machines are still not advanced enough (we are still far from representing human consciousness mathematically) to understand these languages natively or to learn them the way humans do.

Natural language processing is a branch of artificial intelligence that provides methods through which machines can make some sense of these human languages using mathematical equations and advanced machine learning techniques.

How does NLP actually work?


Let us start by thinking about how we learned the language we communicate in.

There are different strategies for learning and understanding a language, depending on your age and whether you already know another language. To better understand NLP, let's consider how a child learns and starts to understand a particular language:

- Children start to understand a language through interaction, not only with their parents and other adults but also with other children. Children who grow up surrounded by conversation typically acquire the language used around them.

- Our brain continuously tries to work out what words mean and what they represent. Over time, this accumulated knowledge helps us form meaningful sentences and express our thoughts.

Since machines work only with numbers, we need a way to convert our language, its words, and the relationships between them into a numerical representation. This is done by building word embeddings, which try to capture the meaning of words and how they relate to other words.
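The most basic step toward a numerical representation is giving every word a number. The following is a minimal sketch, assuming naive lowercase whitespace tokenization (real NLP pipelines use proper tokenizers); the helper names `build_vocabulary` and `encode` are hypothetical. These ids carry no meaning on their own: nothing about 1 and 3 says that "cat" and "dog" are related, and that is exactly the gap word embeddings fill.

```python
def build_vocabulary(sentences):
    """Assign each distinct word an integer id, in order of first appearance."""
    vocab = {}
    for sentence in sentences:
        # Naive tokenization (an assumption): lowercase, then split on whitespace.
        for word in sentence.lower().split():
            if word not in vocab:
                vocab[word] = len(vocab)
    return vocab


def encode(sentence, vocab):
    """Turn a sentence into the list of ids of its words."""
    return [vocab[word] for word in sentence.lower().split()]


corpus = ["The cat sat", "The dog ran"]
vocab = build_vocabulary(corpus)
print(vocab)                         # {'the': 0, 'cat': 1, 'sat': 2, 'dog': 3, 'ran': 4}
print(encode("the dog sat", vocab))  # [0, 3, 2]
```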

Introduction to Neural Word Embeddings in NLP

(Source)

Word embeddings are vector representations of a collection of words (a vocabulary). These vectors can then be combined to build a higher-level representation of any sentence.
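To make this concrete, here is a minimal sketch of an embedding lookup. The 4-value vectors are made-up numbers chosen for illustration, not real pre-trained values, and averaging the word vectors is only one simple way (an assumption here, not something this article prescribes) of turning them into a single sentence representation.

```python
import numpy as np

# Hypothetical 4-value embeddings for a tiny vocabulary (made-up numbers).
# Real pre-trained embeddings have tens to hundreds of values per word.
vocab = {"the": 0, "cat": 1, "dog": 2, "sat": 3}
embedding_matrix = np.array([
    [0.1, 0.0, 0.2, 0.1],  # the
    [0.9, 0.8, 0.1, 0.3],  # cat
    [0.8, 0.9, 0.2, 0.3],  # dog
    [0.2, 0.1, 0.7, 0.6],  # sat
])


def word_vector(word):
    """Look up the row of the embedding matrix that belongs to `word`."""
    return embedding_matrix[vocab[word]]


def sentence_vector(sentence):
    """Represent a sentence as the mean of its word vectors (one simple choice)."""
    vectors = [word_vector(w) for w in sentence.lower().split()]
    return np.mean(vectors, axis=0)


print(sentence_vector("the cat sat"))
```

Averaging ignores word order, so "the cat sat" and "sat the cat" get the same vector; more elaborate models combine word vectors in order-aware ways.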

The most common word embeddings include GloVe and Word2Vec. They are pre-trained on large text corpora using different training methods: Word2Vec uses the Continuous Bag of Words (CBOW) or Skip-gram architecture, while GloVe is trained on global word co-occurrence statistics. (This article does not go into the depth of how these word embeddings are created and trained.)

This means that each word is represented by an array of numbers (a vector). Calculating the cosine similarity between the vectors of two words gives a measure of how semantically similar the words are.
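Cosine similarity measures the angle between two vectors: it divides their dot product by the product of their lengths, cos θ = (a · b) / (‖a‖ ‖b‖), giving 1 for vectors pointing the same way, 0 for perpendicular ones and -1 for opposite ones. A minimal NumPy sketch (the function name `cosine_similarity` is our own):

```python
import numpy as np


def cosine_similarity(a, b):
    """Cosine of the angle between vectors a and b: (a . b) / (|a| * |b|)."""
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    return float(np.dot(a, b) / (np.linalg.norm(a) * np.linalg.norm(b)))


print(cosine_similarity([1, 0], [1, 0]))   # 1.0  (same direction)
print(cosine_similarity([1, 0], [0, 1]))   # 0.0  (perpendicular)
print(cosine_similarity([1, 0], [-1, 0]))  # -1.0 (opposite directions)
```

Because only the angle matters, scaling a vector does not change the result, so the measure compares the direction of word vectors rather than their size.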

For example, using the pre-trained embedding “glove-twitter-25”:

The cosine similarity between the words “cat” and “dog” is 0.95908207.

The cosine similarity between the words “cat” and “france” is 0.36348575.

These values reflect the semantic similarity between the chosen words, which in turn helps a machine learning model understand English better. The value for “cat” and “dog” is much higher (both are animals, and four-legged ones at that), while the value for “cat” and “france” is much lower (one is an animal and the other is a country).
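Comparisons like these can be run with the gensim library, whose downloader offers a model named "glove-twitter-25". This is a sketch, assuming gensim is installed and the model can be downloaded; the helper names `similarities` and `compare_glove_examples` are hypothetical. The GloVe Twitter vocabulary is lowercase, which is why the word is written "france".

```python
def similarities(model, pairs):
    """Return the cosine similarity of each (word1, word2) pair.

    `model` is any object with a gensim-style `similarity(w1, w2)` method,
    such as a gensim KeyedVectors instance.
    """
    return {pair: float(model.similarity(*pair)) for pair in pairs}


def compare_glove_examples():
    """Load glove-twitter-25 via gensim and print the two comparisons above."""
    # Assumes `pip install gensim`; the model is downloaded on first use.
    import gensim.downloader as api

    glove = api.load("glove-twitter-25")  # a gensim KeyedVectors object
    pairs = [("cat", "dog"), ("cat", "france")]
    for (w1, w2), score in similarities(glove, pairs).items():
        print(f"{w1} / {w2}: {score:.4f}")


# Usage: compare_glove_examples()
```

Keeping the comparison logic in `similarities` separate from the download means it works with any model object that exposes a `similarity` method.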

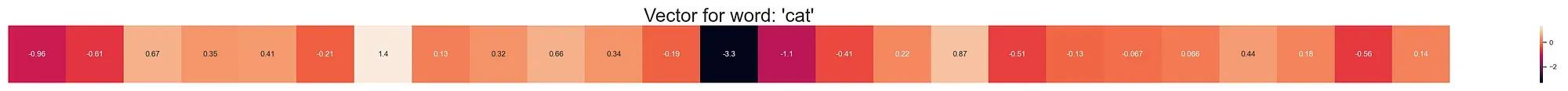

Following are the vector representations of the words discussed above:

Figure: Vector representation of the word “cat”

Figure: Vector representation of the word “dog”

Figure: Vector representation of the word “France”
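The vectors shown in these figures can also be printed directly. A sketch, assuming gensim and the pre-trained "glove-twitter-25" model mentioned earlier in this article (so each vector has 25 values); the helper names `word_vectors` and `show_glove_vectors` are hypothetical, and "france" is lowercase because the GloVe Twitter vocabulary is.

```python
import numpy as np


def word_vectors(model, words):
    """Fetch the embedding vector of each word as a NumPy array.

    `model` can be anything indexable by word, e.g. a gensim KeyedVectors
    object or a plain dict mapping word -> list of numbers.
    """
    return {word: np.asarray(model[word], dtype=float) for word in words}


def show_glove_vectors():
    """Print the glove-twitter-25 vectors for the words used in this article."""
    # Assumes `pip install gensim`; the model is downloaded on first use.
    import gensim.downloader as api

    glove = api.load("glove-twitter-25")
    for word, vector in word_vectors(glove, ["cat", "dog", "france"]).items():
        print(word, vector.shape, np.round(vector, 3))


# Usage: show_glove_vectors()
```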

Let's understand the above examples better with a 3D visualization.

I hope the plot above gives a better sense of what the vectors represent, and of how easily similar and dissimilar words can be told apart when the word embedding is trained properly on a clean, well-processed corpus.

The illustration above is for intuition only: it was generated by compressing each word vector down to 3 values (x, y, z) so that it can be visualized.
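This article does not specify which compression method produced the 3D plot. One common choice is principal component analysis (PCA), which keeps the 3 directions along which the vectors vary the most; t-SNE is another popular option. The following sketch implements PCA with NumPy's SVD and is an assumption, not necessarily the method used for the figure; the name `reduce_to_3d` is hypothetical.

```python
import numpy as np


def reduce_to_3d(vectors):
    """Project row vectors onto their 3 principal components (PCA via SVD).

    Returns an array of shape (n_words, 3) holding (x, y, z) coordinates.
    Needs at least 3 words and at least 3 values per vector.
    """
    X = np.asarray(vectors, dtype=float)
    X_centered = X - X.mean(axis=0)  # PCA works on mean-centered data
    # Rows of vt are the principal directions, sorted by decreasing variance.
    _, _, vt = np.linalg.svd(X_centered, full_matrices=False)
    return X_centered @ vt[:3].T


# Illustration with random stand-in vectors: 5 "words", 25 values each.
rng = np.random.default_rng(0)
points = reduce_to_3d(rng.normal(size=(5, 25)))
print(points.shape)  # (5, 3)
```

The (x, y, z) rows can then be drawn with any 3D scatter plot, for example matplotlib's `scatter` on an axes created with `projection="3d"`. Since much of the information lives in the discarded dimensions, such a plot is only a rough picture of the full vectors.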

Even with only 3 dimensions, this visualization gives a good overall picture of the vectors. A machine learning model that uses pre-trained word embeddings gets an even better semantic understanding of words, because it works with many more dimensions (more granularity). Some pre-trained word embeddings use up to 300 values per vector for better granularity and performance.

Conclusion

I hope this article serves as an overview of how machines are able to understand natural languages and perform complex calculations over them for applications such as named entity recognition, autocorrect and autocomplete, and text generation from prompts.

In upcoming articles, we will see how to train our own embeddings, compare different training methods (with their pros and cons), and use the embeddings in real-life applications.

To get your own NLP workspace and a better understanding of AI concepts without having to write code, head over to Scalifi Ai, a no-code platform for building artificial intelligence pipelines and services.
