The Key to Removing AI Slop - Memory Managed AI Agents

This blog is for developers, engineers, product managers, technical leaders, AI researchers and architects, enterprises, startups, and AI enthusiasts.

If you’re new to AI, we suggest reading The Beginner's Guide to AI Models: Understanding the Basics and In-Depth Study of Large Language Models (LLM) to understand the contents of this article better.

TL;DR

- The Problem: AI slop is a memory problem. Stateless agents forget users instantly, leading to generic, context-free output.

- The Solution: A two-part memory architecture. Tactical Short-Term Memory (STM) for immediate context, and strategic Long-Term Memory (LTM) for persistent knowledge.

- LTM Design: Building LTM requires key decisions on what to store (facts, experiences, rules) and when (real-time synchronous vs. fast asynchronous updates).

- The Mandate: Mastering this memory blueprint is non-negotiable. It is the only way to build intelligent agents that eliminate slop and deliver real value.

Introduction to Dealing with AI Slop

It’s 2025, and AI adoption keeps increasing at a rapid pace. So does AI slop. Research published in Harvard Business Review found that about 40% of surveyed employees had received AI slop from their colleagues. As bad as that may seem, using AI isn’t the problem. The problem is a neglected aspect of AI: memory.

Without memory, LLMs and agents are digital amnesiacs. They produce generic, context-free output because they can't recall past interactions, preferences, or your corrections. This is the very engine of AI slop.

The solution is a robust memory architecture. This system enables agents to maintain contextual continuity and deliver personalized experiences, distinguishing between short-term recall for ongoing tasks and long-term knowledge for lasting relationships.

But this power is a double-edged sword. Poorly managed memory creates slow, irrelevant, and costly agents. Get it wrong, and you've built a liability. Our blog dives deep into mastering agent memory, ensuring your AI gives intelligent responses instead of AI slop.

What is AI Memory?

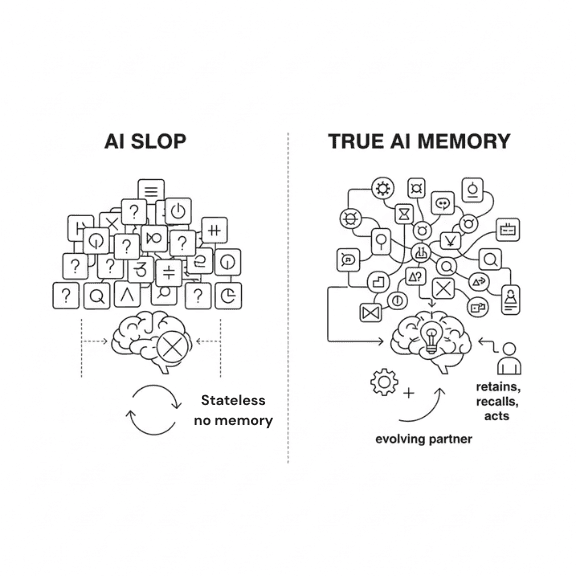

To defeat AI slop, we must first understand its source. The generic, disconnected responses that flood our inboxes stem from a fundamental design flaw in most language models: they are stateless. Each prompt is an island, processed in isolation without any real recall of what came before. The “memory” of an out-of-the-box LLM isn’t memory at all; it’s just patterns baked into its parameters during training.

AI Slop VS Managed Memory

True AI memory is the antidote. It’s a dynamic system engineered to let agents retain, recall, and act on information from past interactions. It’s not just a database. Instead, it’s an active component in the decision-making loop, turning a forgetful calculator into an intelligent, evolving partner.

The High Stakes of Getting Memory Right

Why is this architectural shift so critical? Because without it, AI agents remain stuck in a loop of mediocrity. Integrating a proper memory function unlocks a cascade of mission-critical advantages that separate truly advanced AI from the slop generators.

First, it delivers context-rich responses. By retaining both short-term conversational flow and long-term user facts, the agent maintains continuity, delivering personalized and relevant outputs every time.

Second, it mitigates hallucination. Grounding responses in a verifiable memory store anchors the agent in reality.

Third, it drives brutal efficiency. Intelligent retrieval avoids redundant API calls and pointless database queries, slashing costs and accelerating processes.

Ultimately, memory is the foundation for adaptation. It allows an agent to learn, evolve, and handle complex, multi-step tasks—transforming it from a simple tool into an indispensable asset.

The Blueprint: Short-Term vs. Long-Term Memory

Not all memory is created equal. A robust agent architecture relies on a cognitive blueprint that mirrors human memory, separating recall by scope and persistence. This distinction is the first and most critical step in building an agent that can actually think.

Short-Term Memory (STM) is the agent’s working consciousness. It’s thread-scoped, holding temporary information within a single, ongoing conversation. Think of it as the agent's RAM, keeping track of the immediate context to ensure a coherent dialogue. It’s powerful but volatile and quickly hits the limits of the context window.

Long-Term Memory (LTM) is the agent’s permanent knowledge base. It persists across sessions and conversations, storing crucial information that defines the agent’s intelligence over time. This is where an agent stores facts about a user (semantic memory), recalls past events to inform future actions (episodic memory), and refines its own operational rules (procedural memory).

Mastering the interplay between STM and LTM isn’t just an option; it's the only way to build an agent that removes AI slop instead of creating it. Let’s take a deeper look at the two to understand how they work.

Short-Term Memory (Thread-Scoped)

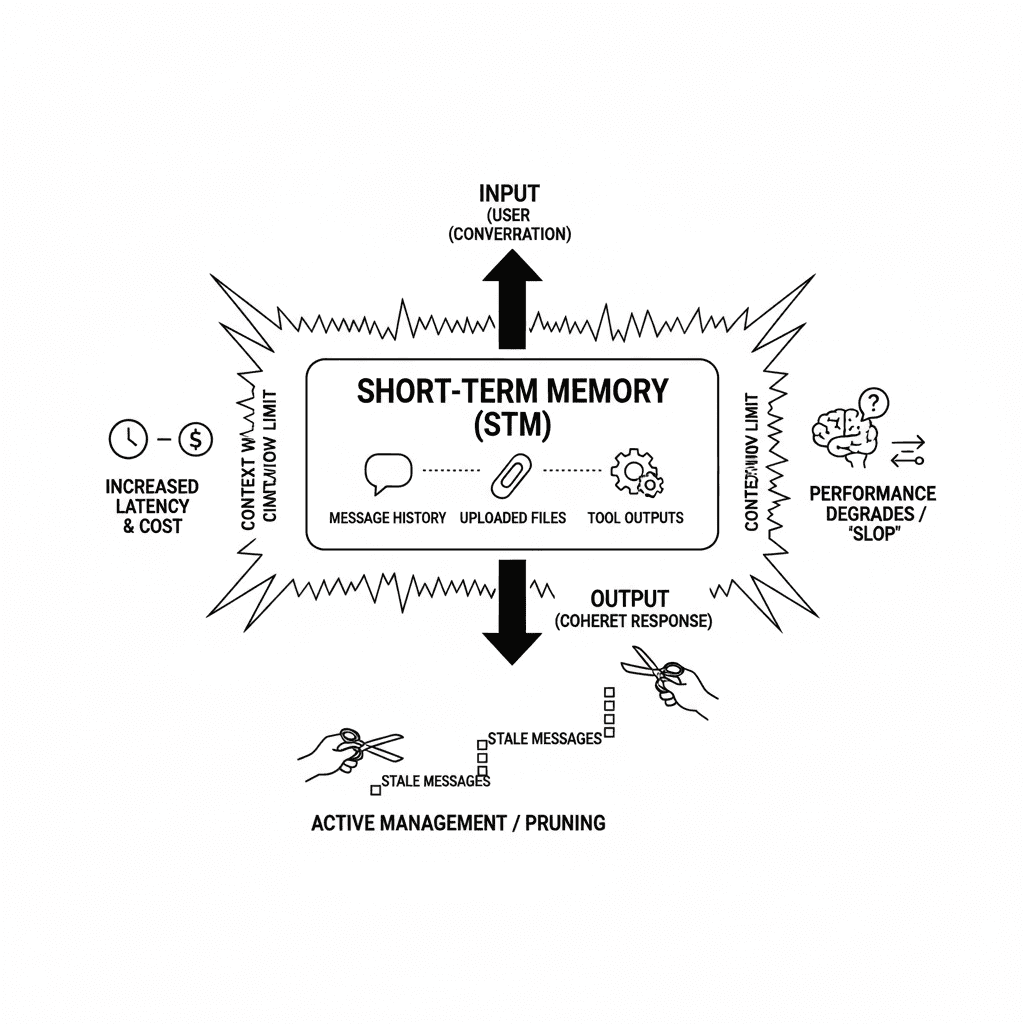

We've established the blueprint. Now, let's dissect its front line in the war on AI slop: Short-Term Memory (STM). This is the agent’s working consciousness—a temporary, thread-scoped memory that ensures coherence within a single conversation.

Think of it as the agent's tactical RAM. It holds all the immediate context (the message history, uploaded files, and recent tool outputs) within the LLM’s limited context window. By managing this state, STM prevents the agent from losing its place, providing the moment-to-moment continuity required to generate relevant, non-slop responses.

Short Term Memory

But this tactical advantage is fragile. STM lives and dies by the context window, and this creates a dangerous paradox. As a conversation deepens, the history grows, pushing the agent toward a hard limit. The window overflows. Performance degrades. The model gets 'distracted' by stale information, driving up latency and cost. Suddenly, your primary defense against slop becomes a new liability—a slow, expensive, and confused agent.

Therefore, managing STM is a high-stakes balancing act. It is absolutely essential for defeating immediate, conversational slop, but its inherent volatility and limitations prove it's only half the battle. You must actively manage it, pruning stale messages to maintain peak performance.
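To make the pruning idea concrete, here is a minimal sketch of one way to trim a conversation so it fits a token budget. It is an illustration under stated assumptions, not a reference implementation: `Message`, `estimate_tokens`, and `trim_history` are hypothetical names, and the word-count "tokenizer" is a crude stand-in for whatever tokenizer your model actually uses.

```python
from dataclasses import dataclass


@dataclass
class Message:
    role: str      # "system", "user", or "assistant"
    content: str


def estimate_tokens(text: str) -> int:
    """Crude stand-in for a real tokenizer: one token per whitespace-separated word."""
    return len(text.split())


def trim_history(messages: list[Message], max_tokens: int) -> list[Message]:
    """Keep system messages plus the newest turns that fit in max_tokens."""
    system = [m for m in messages if m.role == "system"]
    budget = max_tokens - sum(estimate_tokens(m.content) for m in system)

    kept: list[Message] = []
    # Walk backwards so the most recent turns are considered first.
    for msg in reversed([m for m in messages if m.role != "system"]):
        cost = estimate_tokens(msg.content)
        if cost > budget:
            break
        kept.append(msg)
        budget -= cost

    # Restore chronological order before returning.
    return system + list(reversed(kept))


history = [
    Message("system", "You are a helpful assistant."),
    Message("user", "My name is Priya and I work on billing."),
    Message("assistant", "Nice to meet you, Priya."),
    Message("user", "Summarize the open billing tickets."),
]
trimmed = trim_history(history, max_tokens=15)
for m in trimmed:
    print(f"{m.role}: {m.content}")
```

Notice what gets dropped here: the oldest user message, which happens to contain the user's name and team. Pruning keeps STM fast, but anything worth keeping beyond the window has to live somewhere else.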

Beginner’s Example:

Think of an AI’s Short-Term Memory like a barista. A good one remembers your complex order perfectly for one transaction. But an overloaded one gets confused and hands you the wrong drink.

That wrong drink? That’s AI slop, created when the agent's immediate memory is flooded.

Which brings us to the most important component of managing AI slop with memory: Long-Term Memory.

Long-Term Memory: The Strategic Solution to Slop

If STM is the agent's tactical RAM, Long-Term Memory (LTM) is its strategic hard drive. STM wins the immediate battle, but its memory is wiped after every encounter.

LTM is what wins the war. It's the persistent, cross-session architecture that lets an agentic AI system retain knowledge, learn from experience, and truly evolve.

This system ensures an agent remembers you—and your preferences—tomorrow, next week, and next year, turning it from a tool into an intelligent partner.

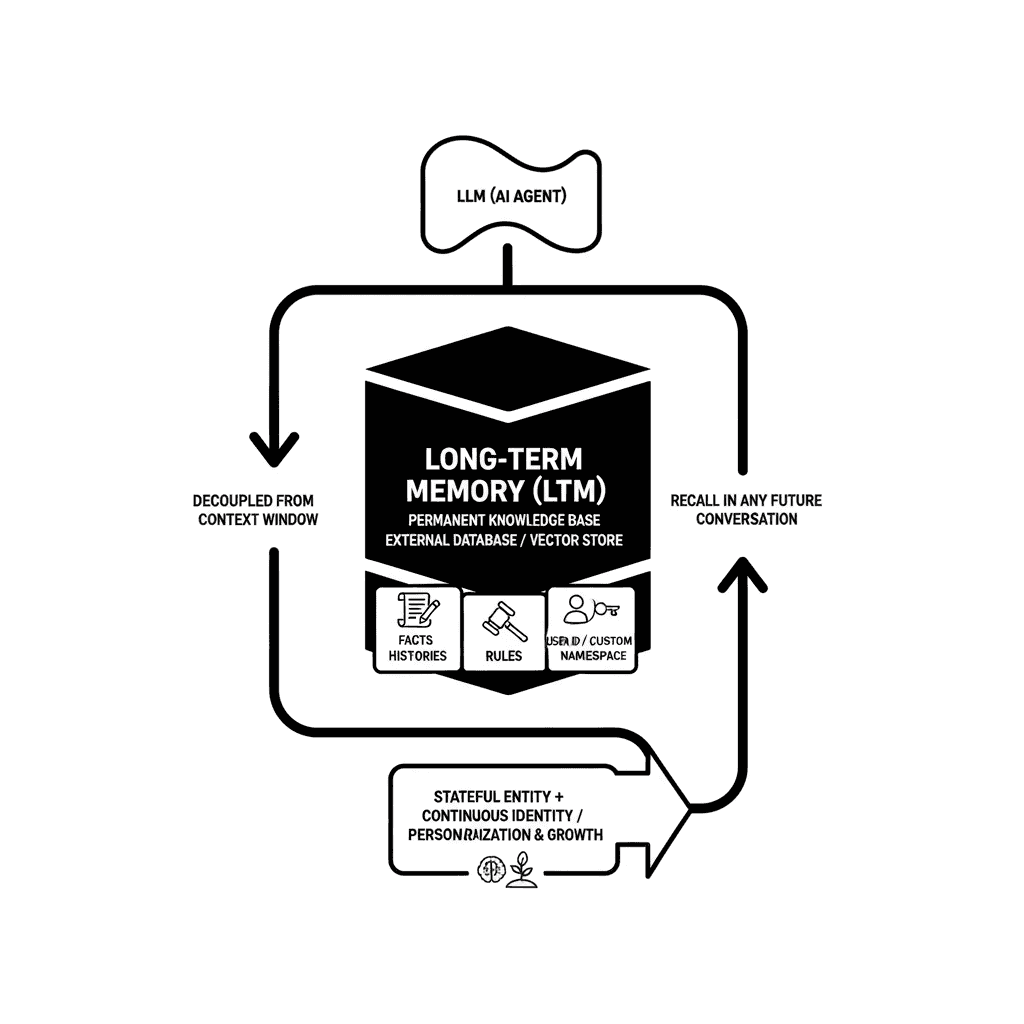

Definition and Characteristics

Unlike the fleeting memory of a single chat, LTM is a permanent knowledge base stored externally in databases or vector stores. It is completely decoupled from the LLM’s volatile context window and isn't organized by temporary 'threads.' Instead, it uses a custom, permanent namespace, like a user ID.

Long Term Memory

This structure allows an agent to build a lasting repository of facts, histories, and rules that can be recalled at any time, in any future conversation.

It transforms the agent from a stateless machine into a stateful entity with a continuous identity, capable of true personalization and growth.

Key Design Questions

Implementing LTM is not a one-size-fits-all problem. Building a brain is complex. Get it wrong, and you create a disorganized mess.

Key Design Questions for LTM

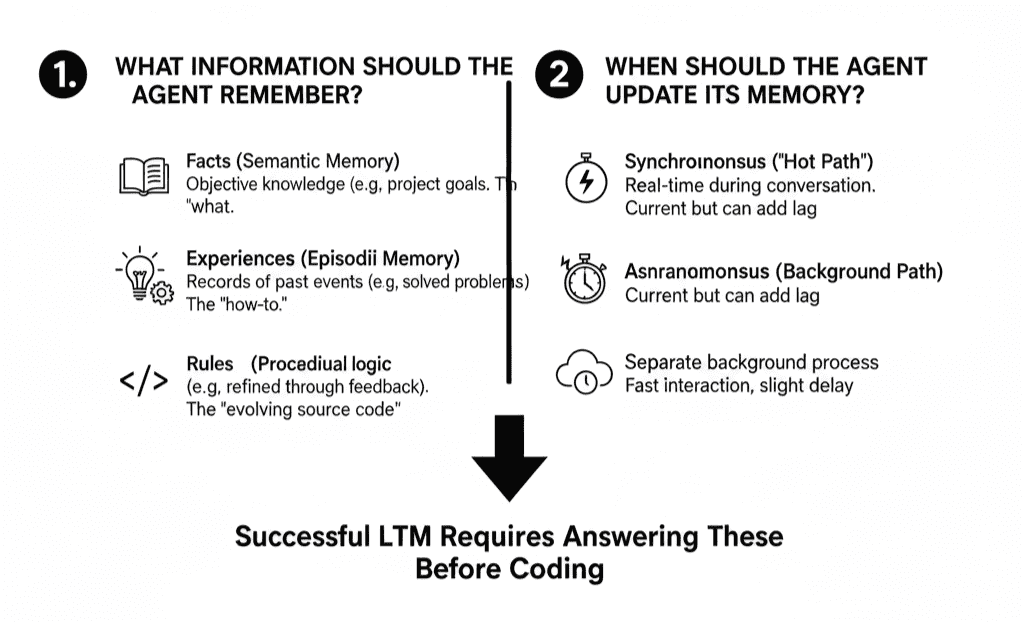

Success requires answering two fundamental questions before you write a single line of code:

1. What information should the agent remember?

The type of information dictates the agent’s capabilities. We can break it down into three critical categories:

- Facts (Semantic Memory): Objective knowledge. What are the user's project goals? This is the 'what' that grounds the agentic AI system in reality.

- Experiences (Episodic Memory): Records of past events. How did the agentic AI system solve a similar problem before? This is the 'how-to' learned from doing.

- Rules (Procedural Memory): The operational logic an agentic AI system follows. This is the agent's evolving 'source code,' refined through feedback.

2. When should the agent update its memory?

The timing of memory updates is a critical trade-off between immediacy and performance.

- Synchronously ('Hot Path'): Memory is updated in real-time during the conversation. This ensures knowledge is current, but can add noticeable lag.

- Asynchronously ('Background Path'): Memory is updated as a separate background process. This keeps the interaction fast but risks a slight delay in recall.

Types of Long-Term Memory

We've established the critical design questions. Now we execute. Answering 'what to remember' means mastering the three pillars of an agent's long-term brain: Semantic, Episodic, and Procedural memory.

Each serves a unique, non-negotiable purpose in the war on AI slop. Understanding how to build and manage them is the difference between an agent that learns and one that is doomed to repeat its failures.

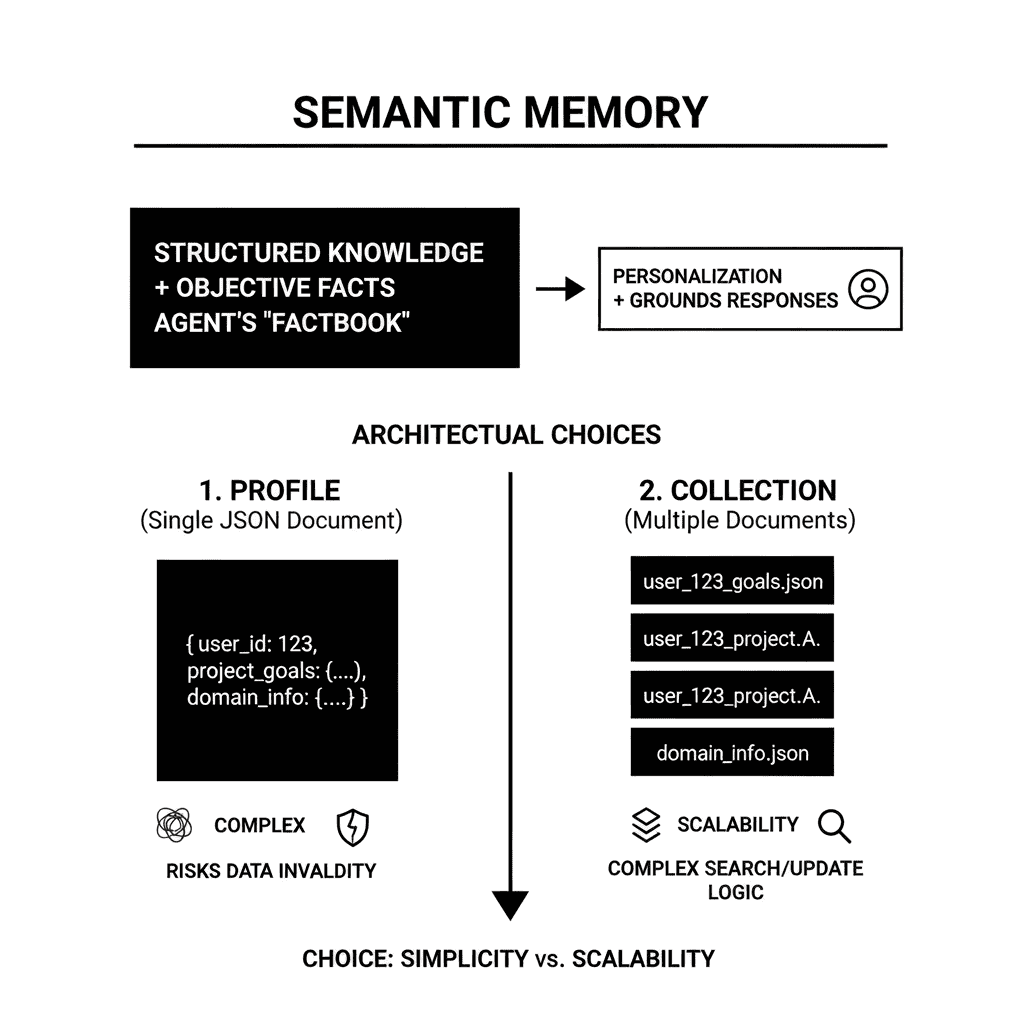

1. Semantic Memory (The Factbook)

Semantic Memory is an agentic AI system's repository for structured knowledge. It stores the objective facts and concepts needed to ground its responses in reality and deliver true personalization.

This is where an agent remembers key details about a user, a project, or a domain. It is the agent's core 'factbook,' ensuring it never has to ask the same question twice. This is distinct from 'semantic search,' which is a retrieval technique.

Key Semantic Memory

Developers face a critical architectural choice in how to store these facts. The decision carries a significant trade-off between simplicity and scalability.

- Profile: A single JSON document per entity. It’s simple to start, but it grows complex and error-prone over time, and each update risks leaving the document in an invalid state.

- Collection: Multiple, narrowly scoped documents. This approach scales far better but shifts complexity to the search and update logic.

Example: Think of Semantic Memory as your AI's 'factbook.' You tell it your client is 'ACME Corp' and the project deadline is next Friday.

It stores these objective facts permanently. Now, it can provide smart, personalized reminders and never has to ask you again, eliminating repetitive, sloppy interactions.
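The profile and collection approaches described above can be sketched side by side. This is a minimal illustration using plain Python dicts; the helper names (`update_profile`, `add_fact`, `facts_about`) and the document fields are assumptions made for the example, not part of any particular library.

```python
import json

# Profile: one JSON document per entity, rewritten as a whole on each update.
profile = {"client": "ACME Corp", "deadline": "next Friday"}


def update_profile(profile: dict, changes: dict) -> dict:
    """Return a new profile with the changes merged over the old values."""
    return {**profile, **changes}


# Collection: many small documents, each holding one narrowly scoped fact.
collection: dict[str, dict] = {}


def add_fact(collection: dict[str, dict], key: str, subject: str, fact: str) -> None:
    """Insert a fact, or overwrite the fact already stored under this key."""
    collection[key] = {"subject": subject, "fact": fact}


def facts_about(collection: dict[str, dict], subject: str) -> list[str]:
    """Look up every stored fact for one subject."""
    return [doc["fact"] for doc in collection.values() if doc["subject"] == subject]


profile = update_profile(profile, {"deadline": "next Monday"})

add_fact(collection, "client-name", "client", "Client is ACME Corp")
add_fact(collection, "deadline", "project", "Deadline is next Friday")
add_fact(collection, "deadline", "project", "Deadline moved to next Monday")

print(json.dumps(profile))
print(facts_about(collection, "project"))
```

The profile is easy to read and write, but every update touches the whole document. The collection keeps each write small, at the cost of needing lookup logic (here a simple subject match) to reassemble what the agent knows.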

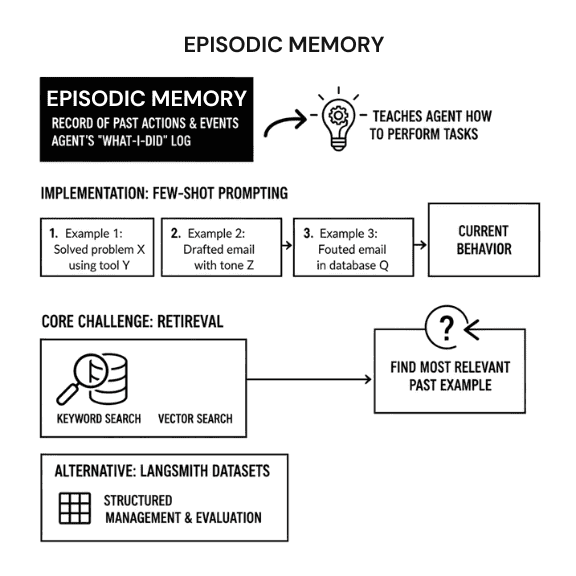

2. Episodic Memory (The Experience Log)

An agent that only knows facts is still unintelligent. It must also remember its experiences, the past actions and events that teach it how to perform tasks correctly.

This is Episodic Memory, the agent's record of what it has done. It is most commonly implemented through few-shot prompting, where the agent is shown examples of past successes to guide its current behavior.

Episodic Memory

The core challenge isn't storing these experiences, but retrieving the right one at the right time. This typically relies on keyword or vector-based search to find the most relevant past example.

For more granular control, developers can use alternatives like LangSmith datasets, which allow for a more structured approach to managing and evaluating these powerful examples.

Example: Think of Episodic Memory as the AI’s muscle memory. The first time your agent generates a sales summary, you approve its format.

The agent saves this successful 'episode.' Now, every time you ask for a summary, it recalls that specific winning formula and repeats it, avoiding guesswork and slop.
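Here is a small sketch of that retrieve-then-prompt loop: find the most relevant past episode with a keyword match, then place it ahead of the new request as a few-shot example. Everything in it is hypothetical (`Episode`, `overlap_score`, `retrieve_examples`, `build_few_shot_prompt`), and the word-overlap score is a deliberately simple stand-in for the keyword or vector search a real system would use.

```python
from dataclasses import dataclass


@dataclass
class Episode:
    task: str       # what the user asked for
    output: str     # the response the user approved


def overlap_score(query: str, text: str) -> int:
    """Count distinct lowercase words shared by the query and the text."""
    return len(set(query.lower().split()) & set(text.lower().split()))


def retrieve_examples(query: str, episodes: list[Episode], k: int = 1) -> list[Episode]:
    """Return up to k episodes whose task shares at least one word with the query."""
    scored = [(overlap_score(query, ep.task), ep) for ep in episodes]
    scored = [pair for pair in scored if pair[0] > 0]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [ep for _, ep in scored[:k]]


def build_few_shot_prompt(query: str, examples: list[Episode]) -> str:
    """Place retrieved episodes ahead of the new request as worked examples."""
    shots = "\n\n".join(f"Request: {ep.task}\nResponse: {ep.output}" for ep in examples)
    new_request = f"Request: {query}\nResponse:"
    return f"{shots}\n\n{new_request}" if shots else new_request


episodes = [
    Episode("write a weekly sales summary", "Headline, three bullets, next steps."),
    Episode("draft a bug report", "Steps to reproduce, expected, actual."),
]
query = "write the sales summary for March"
prompt = build_few_shot_prompt(query, retrieve_examples(query, episodes))
print(prompt)
```

Only the sales-summary episode makes it into the prompt; the bug-report episode shares no words with the request and is left out, which is the point of retrieving selectively rather than replaying every past example.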

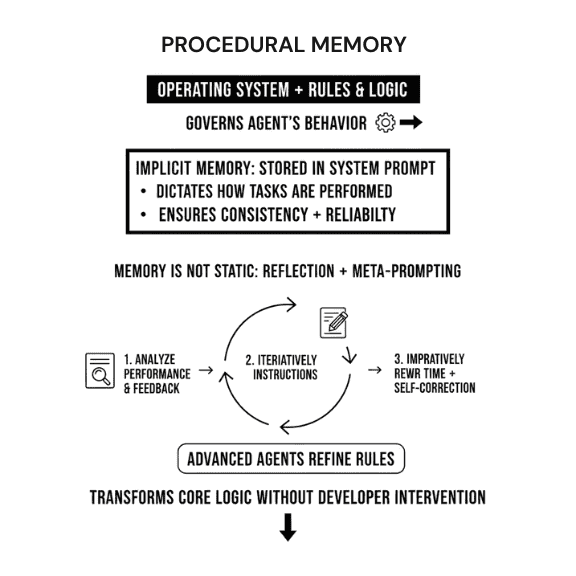

3. Procedural Memory (The Rulebook)

Finally, an agentic AI system needs an operating system. Procedural Memory is the set of rules, instructions, and operational logic that governs the agent’s behavior.

This 'rulebook' is most often stored within the agent’s system prompt. It is the implicit memory that dictates how the agent should perform its tasks, ensuring consistency and reliability.

Procedural Memory

But this memory is not static. The most advanced agents refine their own rules through a process called 'Reflection' or meta-prompting, where they analyze their performance and feedback.

By iteratively rewriting their own instructions, agents can learn from their mistakes and improve over time, transforming their core logic without direct developer intervention.

Example: Procedural Memory is an AI's internal 'rulebook.' Its starting rule might be: 'Always respond formally.'

But after you repeatedly say, 'Be more casual,' the agent reflects on this feedback. It then rewrites its own core instruction to 'Adopt a friendly, casual tone.' It upgrades its own operating system.
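The reflection loop in that example can be sketched in a few lines. One loud assumption: in a real agent the rewrite step would be an LLM call that reads the current instructions and the feedback. Here it is replaced by `rule_based_reflect`, a hard-coded stand-in, so the sketch runs without any model. `ProceduralMemory` and its methods are likewise hypothetical names.

```python
from typing import Callable


def rule_based_reflect(instructions: str, feedback: list[str]) -> str:
    """Stand-in for an LLM reflection call: rewrites the tone rule on repeated feedback."""
    casual_requests = sum("casual" in note.lower() for note in feedback)
    if casual_requests >= 2:
        return instructions.replace(
            "Always respond formally.", "Adopt a friendly, casual tone."
        )
    return instructions


class ProceduralMemory:
    """Holds the agent's current instructions and the feedback collected so far."""

    def __init__(self, instructions: str, reflect: Callable[[str, list[str]], str]):
        self.instructions = instructions
        self.feedback: list[str] = []
        self._reflect = reflect

    def record_feedback(self, note: str) -> None:
        self.feedback.append(note)

    def reflect(self) -> bool:
        """Ask the reflect function for new instructions; report whether they changed."""
        updated = self._reflect(self.instructions, self.feedback)
        changed = updated != self.instructions
        self.instructions = updated
        return changed


memory = ProceduralMemory("Always respond formally.", rule_based_reflect)

memory.record_feedback("Be more casual")
first = memory.reflect()    # one request is not yet "repeated" feedback

memory.record_feedback("Seriously, be more casual")
second = memory.reflect()   # the second request triggers the rewrite

print(first, second, memory.instructions)
```

Passing the reflect function in as a parameter keeps the storage logic separate from the rewriting logic, so the stand-in can later be swapped for a model-backed version without touching the rest of the class.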

Writing and Storing Long-Term Memories

Knowing what to remember is only half the battle. The other half is execution. The engineering choices you make, how and when an agent writes to its memory, directly impact its performance.

Get it wrong, and you build a slow, outdated, or disorganized agentic AI system. Get it right, and you create a system that consistently avoids generating AI slop by accessing the right information at the right time.

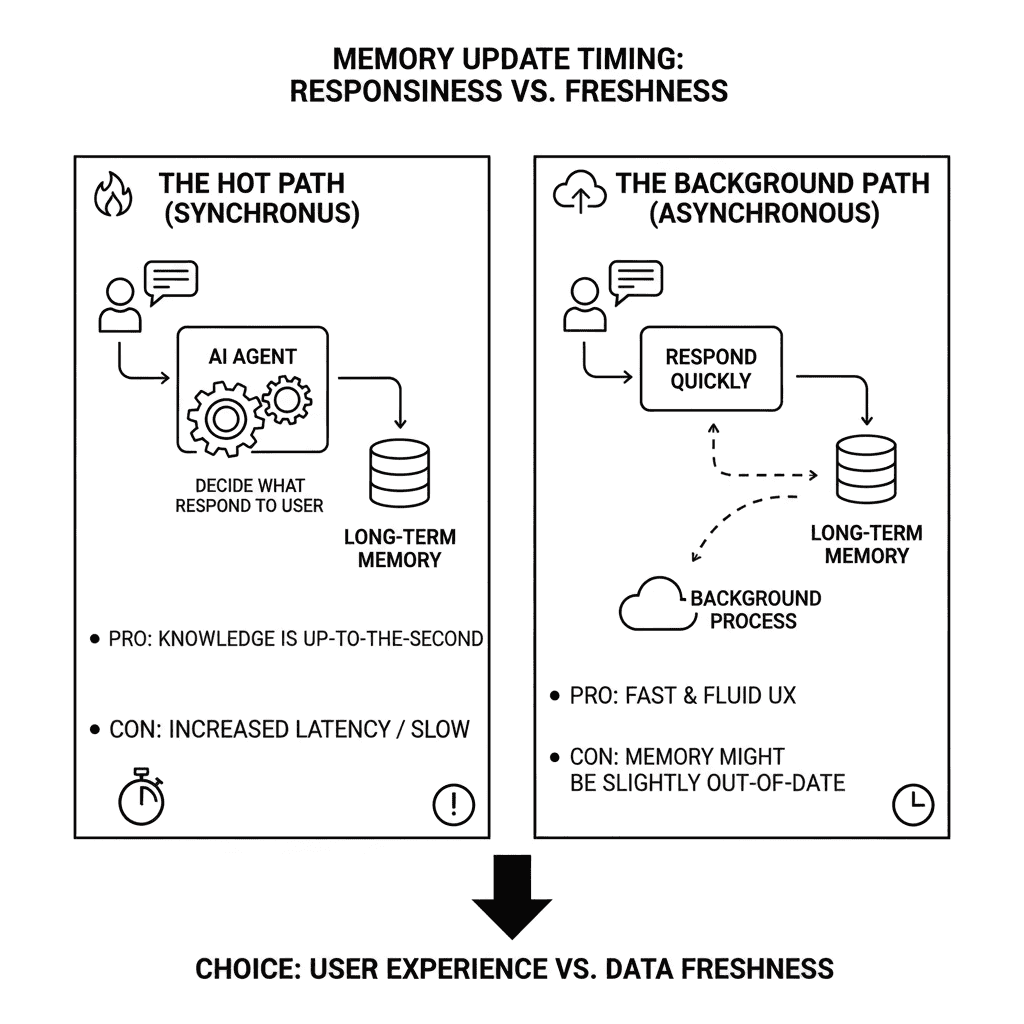

A. Writing Methods: The Speed vs. Immediacy Trade-Off

The most critical decision is when to commit information to memory. This choice presents a fundamental trade-off between the agent's responsiveness and the freshness of its knowledge.

The Speed vs. Immediacy Trade-Off

You must choose between two paths, each with direct consequences for the end-user experience and the quality of the agent's output.

The Hot Path (Synchronous Updates)

This method updates memory in real-time, during the live conversation. The agent decides what to save before it even responds to the user.

Pro: The agent’s knowledge is always up-to-the-second. This immediate reflection is powerful for maintaining context across rapid interactions.

Con: It creates a performance bottleneck. The agent has to think and write simultaneously, which increases latency and makes the conversation feel slow.

The Background Path (Asynchronous Updates)

This method offloads memory creation to a separate, background process. The agent focuses solely on responding to the user quickly.

Pro: The user experience is fast and fluid. Separating the logic eliminates lag and allows the agent to focus entirely on its primary task.

Con: The agent's memory might be slightly out of date. This requires smart scheduling to ensure new information becomes available before it's needed.

Example: Hot Path - A chef takes your order, then immediately writes down every ingredient for later, right as you wait. It's perfectly accurate but slow.

Background Path - The chef takes your order, starts cooking, and a quiet assistant writes down the details later. Fast service, but a tiny chance the assistant missed a detail.
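The two paths can be contrasted in code. The sketch below uses a plain dict as the memory and a worker thread with a queue as the background process. The names (`extract_fact`, `reply_hot_path`, `reply_background_path`, `memory_worker`) and the toy "X is Y" fact extractor are assumptions for illustration; a production system would more likely use a task queue or scheduled job.

```python
import queue
import threading
from typing import Optional

memory: dict[str, str] = {}
pending: queue.Queue = queue.Queue()


def extract_fact(message: str) -> Optional[tuple[str, str]]:
    """Toy extractor: treats 'key is value' messages as facts to remember."""
    if " is " in message:
        key, value = message.split(" is ", 1)
        return key.strip().lower(), value.strip()
    return None


def reply_hot_path(message: str) -> str:
    """Synchronous: write to memory before answering, so the reply waits on the write."""
    fact = extract_fact(message)
    if fact:
        memory[fact[0]] = fact[1]
    return "Noted."


def reply_background_path(message: str) -> str:
    """Asynchronous: hand the message to a worker and answer immediately."""
    pending.put(message)
    return "Noted."


def memory_worker() -> None:
    """Drain the queue and write facts until a None sentinel arrives."""
    while True:
        message = pending.get()
        if message is None:
            pending.task_done()
            break
        fact = extract_fact(message)
        if fact:
            memory[fact[0]] = fact[1]
        pending.task_done()


worker = threading.Thread(target=memory_worker, daemon=True)
worker.start()

reply_hot_path("My client is ACME Corp")
hot_visible = "my client" in memory          # available as soon as the reply returns

reply_background_path("The deadline is next Friday")
pending.join()                                # wait here only to make the demo deterministic
background_visible = "the deadline" in memory

pending.put(None)                             # tell the worker to stop
worker.join()
print(memory)
```

In the hot path the fact is in memory the moment the reply returns. In the background path the reply returns first, and the fact becomes visible only once the worker catches up. The `pending.join()` call exists purely so the demo is deterministic; without it, a lookup right after the reply could miss the new fact.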

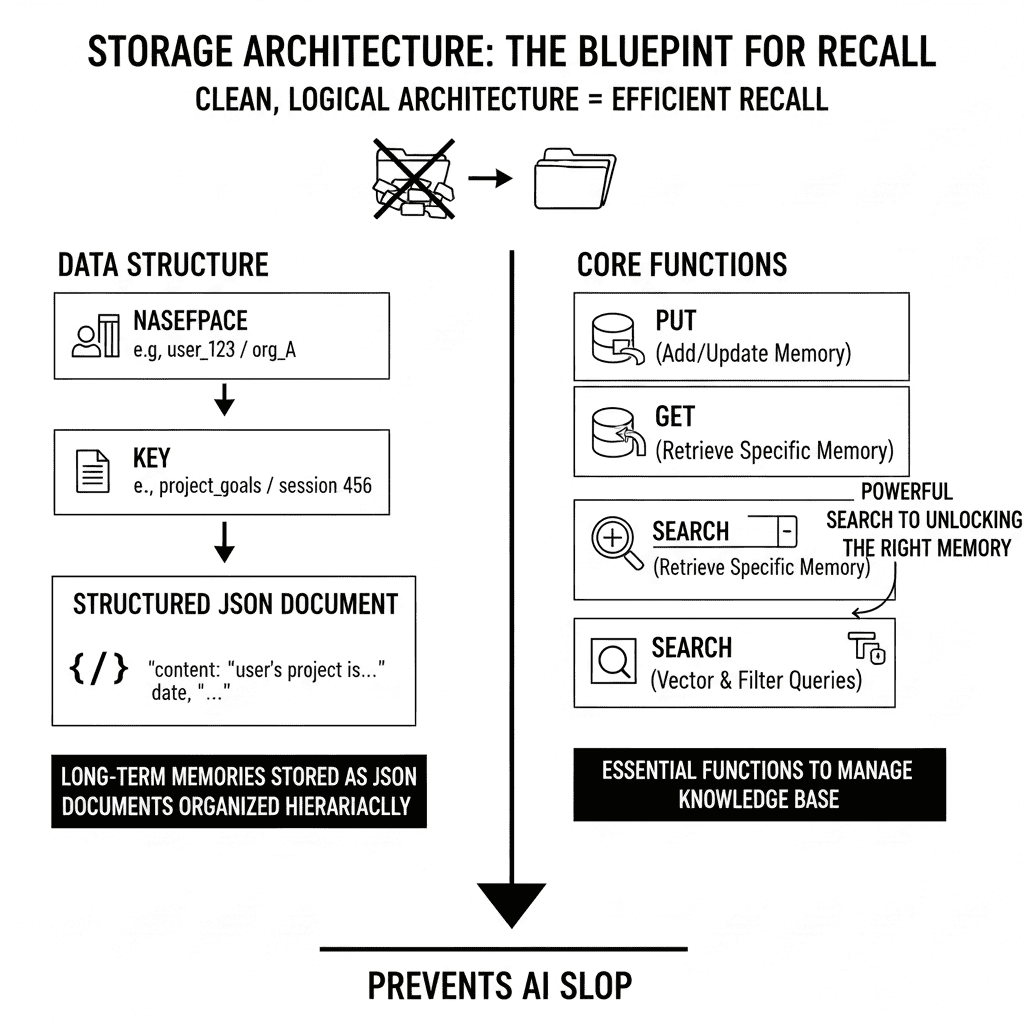

B. Storage Architecture: The Blueprint for Recall

A brilliant memory is useless if it's stored in a digital junk drawer. A clean, logical storage architecture is non-negotiable for efficient recall.

Without it, the agent can't find the information it needs, leading directly to the generic, context-less responses that define AI slop.

Storage Architecture

Data Structure

Long-term memories are stored as structured JSON documents. This data is organized using a simple yet powerful hierarchy to prevent chaos.

Namespace: The organizational scope, like a folder. This is typically a user ID or an organization ID.

Key: The unique identifier for the memory itself, like a filename within the folder.

Core Functions

This architecture is brought to life with a few essential functions that allow the agentic AI system to manage its knowledge base effectively.

The store must support put (to add or update a memory), get (to retrieve a specific memory), and, most importantly, search with advanced vector and filter queries. A powerful search is the key to unlocking the right memory at the right time.

Example: Think of an AI's memory as a well-organized library. Each 'namespace' is a dedicated shelf (like 'Client X projects'), and each 'key' is a specific book title (like 'Q3 Sales Report').

Without this clear system, it's just a chaotic pile of books. With it, the AI can quickly 'put,' 'get,' and 'search' for exactly what it needs.
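A toy version of such a store might look like the sketch below. `MemoryStore` is a hypothetical in-memory class written for this post, not the API of any specific library, and the bag-of-words cosine similarity is only a stand-in for the embedding-based vector search a real store would provide.

```python
import math
from collections import Counter
from typing import Optional


def bag_of_words(text: str) -> Counter:
    """Toy stand-in for an embedding model: lowercase word counts."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two word-count vectors (0.0 if either is empty)."""
    dot = sum(a[w] * b[w] for w in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class MemoryStore:
    """In-memory store addressed by (namespace, key), holding JSON-like dicts."""

    def __init__(self) -> None:
        self._items: dict[str, dict[str, dict]] = {}

    def put(self, namespace: str, key: str, value: dict) -> None:
        """Add a memory, or overwrite the one already stored under this key."""
        self._items.setdefault(namespace, {})[key] = value

    def get(self, namespace: str, key: str) -> Optional[dict]:
        """Fetch one memory by its exact namespace and key."""
        return self._items.get(namespace, {}).get(key)

    def search(self, namespace: str, query: str = "",
               filters: Optional[dict] = None, limit: int = 3) -> list[tuple[str, dict]]:
        """Return (key, value) pairs matching the filters, ranked by similarity to the query."""
        matches = [
            (key, value)
            for key, value in self._items.get(namespace, {}).items()
            if all(value.get(f) == v for f, v in (filters or {}).items())
        ]
        q = bag_of_words(query)
        matches.sort(key=lambda kv: cosine(q, bag_of_words(kv[1].get("text", ""))), reverse=True)
        return matches[:limit]


store = MemoryStore()
ns = "client-x-projects"
store.put(ns, "q3-sales-report", {"text": "Q3 sales grew in the north region", "type": "report"})
store.put(ns, "kickoff-notes", {"text": "Kickoff meeting notes and owners", "type": "notes"})
store.put(ns, "q2-sales-report", {"text": "Q2 sales were flat", "type": "report"})

hits = store.search(ns, query="Q3 sales", filters={"type": "report"})
print([key for key, _ in hits])
```

The filter narrows the shelf to reports before ranking, so the kickoff notes never compete with the sales reports, and the similarity score then puts the Q3 report ahead of the Q2 one.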

Cognis Ai - A Philosophy to Solve AI Work Slop

Back in 2024, we noticed that a majority of the AI systems being used by us and our partners were generating slop. It was hurting our productivity by up to 80% across projects.

But being an organisation that focuses on incorporating AI Agents for its employees, we put our minds to solving the problem.

During the process, we tinkered with memory management, and after 9 months, we have finally been able to create an agentic AI system that uses memory correctly.

Cognis AI not only uses Short-Term and Long-Term Memory but also gives you full control over what your LLM stores and what it ignores.

Rather than keeping it to ourselves, we’ve decided to launch Cognis Ai to the larger community to define a better future of work inclusively for everyone. You can click here to join our waitlist and become one of the first members to guide the development of a truly human + AI collaborative system for the world.

Memory - The Building Block of Intelligent AI

We've dissected the problem and the blueprint. The verdict is clear: AI slop is not a bug. It's a feature of lazy design—the predictable result of building powerful agents with no memory.

The cure is a disciplined approach. It demands balancing the tactical coherence of Short-Term Memory with the strategic, evolving knowledge base of a well-architected Long-Term Memory.

This requires mastering critical trade-offs in what to store—facts, experiences, or rules—and when to store it. Your choices between synchronous and asynchronous updates define your agent's intelligence.

This isn't just about fixing slop. It's about unlocking the future of truly adaptive AI. Building agents that remember is how we ensure they grow smarter, not just older.

External References

- Harvard Business Review: AI-Generated Workslop Is Destroying Productivity. Read the Article

- CNBC: AI-generated ‘workslop’ is here. It’s killing teamwork and causing a multimillion dollar productivity problem, researchers say. Read the Article

- The Guardian: AI tools churn out ‘workslop’ for many US employees, but ‘the buck’ should stop with the boss. Read the Article

- Forbes: Why AI ‘Workslop’ Kills Productivity—And How To Prevent It. Read the Article
